Enterprises don’t just need smart agents; they need traceable ones. This guide shows how to build AI tooling that is testable, governable, and compliant. When AI pilots stall at the edge of production, it’s rarely the model’s fault; it’s the tooling. Integration debt, vague interfaces, and weak guardrails turn clever demos into brittle systems. This guide is a blueprint for fixing that: it shows how to design narrowly scoped, swappable tools that LLMs can call safely.

In AI development, excellence lies not just in a tool's capabilities but in its precision and reliability. Whether you're building an internal assistant or a customer-facing agent, a thoughtful engineering approach transforms fragile prototypes into robust, dependable assets. This means focusing on specificity, modularity, and security, and leveraging modern standards like the Model Context Protocol (MCP) to ensure your tools are both smart and safe.

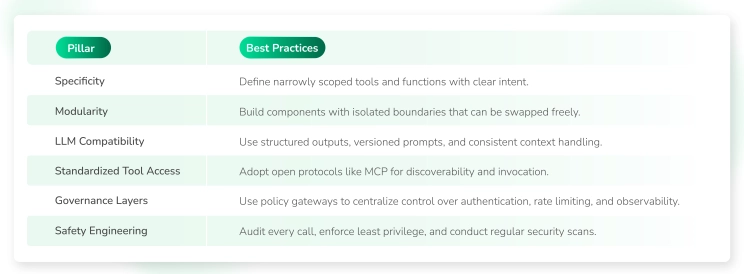

1. Engineering Principles: Specificity, Modularity, and LLM Compatibility

The foundation of a reliable AI toolset rests on three core principles:

- Specificity is key: Each tool should have a narrow, well-defined purpose. Instead of creating a single, broad "do-everything" tool, build smaller, specialized ones: for example, one tool to "get customer data" and a separate one to "schedule a meeting." This clarity makes the model's tool choices more predictable and improves safety by limiting each tool's scope of action.

- Modularity wins: Think of your AI system as a set of LEGO bricks, not a single block of concrete. By architecting components with clear boundaries and interfaces, you can easily test, update, or replace individual parts without disrupting the entire system. For example, frameworks like LangChain emphasize modularity, allowing you to swap out an embedding model or vector store without rebuilding your entire agent.

- LLM compatibility matters: Your tools must communicate cleanly with large language models (LLMs). This means designing components around structured calls, for instance well-defined JSON inputs and outputs. It's also crucial to version your prompts and context just as you version your code. That keeps behaviour consistent and changes traceable as the underlying models evolve.
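The specificity and structured-I/O principles can be made concrete with a minimal Python sketch of a single narrowly scoped tool. The tool name `get_customer`, the in-memory `CUSTOMERS` data, and the `PROMPT_VERSION` tag are hypothetical illustrations, not part of any particular framework. The point is the shape:

- one read-only job,
- a JSON request in and a JSON response out,
- a version string that travels with every response so behaviour changes stay traceable.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical version tag for the tool description and prompt shipped to the model.
# Bump it whenever wording or schema changes, exactly as you would tag a code release.
PROMPT_VERSION = "get_customer/v2"

# Hypothetical in-memory data standing in for a real CRM lookup.
CUSTOMERS = {
    "C-1001": {"name": "Ada Lovelace", "tier": "gold"},
    "C-1002": {"name": "Alan Turing", "tier": "silver"},
}


@dataclass(frozen=True)
class CustomerRecord:
    customer_id: str
    name: str
    tier: str


def _reply(ok: bool, **fields: object) -> str:
    """Every response is JSON and carries the version that produced it."""
    return json.dumps({"ok": ok, "version": PROMPT_VERSION, **fields})


def get_customer(request_json: str) -> str:
    """Narrow tool: read one customer by ID. It cannot update, delete, or schedule."""
    try:
        request = json.loads(request_json)
    except json.JSONDecodeError:
        return _reply(False, error="request is not valid JSON")
    customer_id = request.get("customer_id") if isinstance(request, dict) else None
    if not isinstance(customer_id, str):
        return _reply(False, error="customer_id must be a string")
    row = CUSTOMERS.get(customer_id)
    if row is None:
        return _reply(False, error="customer not found")
    record = CustomerRecord(customer_id=customer_id, **row)
    return _reply(True, data=asdict(record))


if __name__ == "__main__":
    print(get_customer('{"customer_id": "C-1001"}'))
    print(get_customer('{"customer_id": 42}'))
```

Because failures come back as structured JSON rather than exceptions, the model (and your logs) can tell "bad input" apart from "not found" without parsing free text.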
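The modularity principle can be sketched with a structural interface. The agent-side code depends only on a small retrieval contract, so one backend can replace another without touching the agent. `Retriever`, `KeywordRetriever`, `PrefixRetriever`, and `build_context` are hypothetical names for illustration. This is not LangChain's API, only the same idea expressed with Python's `typing.Protocol`.

```python
from typing import Protocol


class Retriever(Protocol):
    """The only contract the agent knows about: a query in, ranked passages out."""

    def search(self, query: str, k: int) -> list[str]: ...


class KeywordRetriever:
    """Ranks documents by how many query words they share (a stand-in backend)."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def search(self, query: str, k: int) -> list[str]:
        terms = set(query.lower().split())
        scored = [(len(terms & set(d.lower().split())), d) for d in self.docs]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [d for score, d in ranked if score > 0][:k]


class PrefixRetriever:
    """A second, interchangeable backend: returns documents that start with the query."""

    def __init__(self, docs: list[str]) -> None:
        self.docs = docs

    def search(self, query: str, k: int) -> list[str]:
        q = query.lower()
        return [d for d in self.docs if d.lower().startswith(q)][:k]


def build_context(retriever: Retriever, question: str, k: int = 2) -> str:
    """Agent-side code: depends on the Retriever protocol, never on a concrete backend."""
    passages = retriever.search(question, k)
    return "\n".join(f"- {p}" for p in passages) or "- (no context found)"
```

Swapping `KeywordRetriever(docs)` for `PrefixRetriever(docs)`, or for a real vector store wrapped to expose the same `search` method, requires no change to `build_context`. Each backend can also be unit-tested on its own.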

2. Model Context Protocol (MCP): A Foundation for Tool Integration

To move beyond custom, one-off integrations, the industry needs open standards. This is where the Model Context Protocol (MCP) comes in.

- What is MCP? Introduced by Anthropic, MCP is an open protocol, with open-source SDKs, that acts as a universal interface for AI systems. Think of it as a "USB-C port for AI integrations." It standardizes how AI applications, and the LLM-based agents inside them, connect to and interact with external systems such as databases, APIs, and other services.

- How it works: In an MCP-enabled workflow, the host application that runs the LLM (for example, a chat app or an IDE) uses an MCP client to send structured JSON-RPC requests to an MCP server. The server exposes a list of available tools. Each tool is described by a name, a natural-language description, and a JSON Schema for its inputs. The client discovers these tools at runtime (the `tools/list` method), and the model chooses which one to use. The client then invokes it (`tools/call`) and receives a structured response. This lets the agent complete multi-step tasks without being pre-programmed for every possible action.

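- Why it matters: MCP addresses the "N × M integration problem," in which developers must build a separate, custom connector for every pairing of an AI application with a tool. With a shared protocol, each application implements an MCP client once and each tool is exposed through an MCP server once, so the work grows as N + M instead. For example, five applications and twenty tools need 25 implementations rather than 100 bespoke connectors. As major AI vendors such as OpenAI and Google DeepMind adopt the standard, their systems become broadly compatible with a growing ecosystem of third-party tools.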
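The discover-then-invoke flow can be illustrated with a Python sketch. It builds JSON-RPC 2.0 messages shaped like MCP's `tools/list` and `tools/call` exchanges and answers them with a toy in-process server. This is a simplified illustration, not the official MCP SDK. It skips the initialization handshake, capability negotiation, and transports (such as stdio or HTTP). The `get_order_status` tool, its data, and the `handle` function are hypothetical.

```python
import json
from typing import Any, Callable

# Hypothetical backing data for the example tool.
ORDERS = {"A-17": "shipped", "B-42": "processing"}

# Hypothetical registry: tool name -> (description, JSON Schema for inputs, handler).
Handler = Callable[[dict[str, Any]], str]
TOOLS: dict[str, tuple[str, dict[str, Any], Handler]] = {
    "get_order_status": (
        "Return the fulfilment status of a single order.",
        {"type": "object",
         "properties": {"order_id": {"type": "string"}},
         "required": ["order_id"]},
        lambda args: ORDERS.get(args["order_id"], "unknown order"),
    ),
}


def _response(request_id: Any, *, result: Any = None, error: Any = None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    body: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id}
    if error is not None:
        body["error"] = error
    else:
        body["result"] = result
    return json.dumps(body)


def handle(raw: str) -> str:
    """Answer one request shaped like MCP's tools/list or tools/call (simplified)."""
    req = json.loads(raw)
    rid, method, params = req.get("id"), req.get("method"), req.get("params") or {}
    if method == "tools/list":
        # Discovery: advertise each tool's name, description, and input schema.
        tools = [{"name": n, "description": d, "inputSchema": s}
                 for n, (d, s, _) in TOOLS.items()]
        return _response(rid, result={"tools": tools})
    if method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return _response(rid, error={"code": -32602,
                                         "message": f"Unknown tool: {params.get('name')}"})
        _, schema, fn = entry
        args = params.get("arguments") or {}
        missing = [k for k in schema.get("required", []) if k not in args]
        if missing:
            # Tool-level failures are reported inside the result with isError set.
            text, is_error = f"Missing arguments: {', '.join(missing)}", True
        else:
            text, is_error = fn(args), False
        return _response(rid, result={"content": [{"type": "text", "text": text}],
                                      "isError": is_error})
    return _response(rid, error={"code": -32601, "message": f"Method not found: {method}"})


if __name__ == "__main__":
    listing = json.loads(handle('{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}'))
    print([t["name"] for t in listing["result"]["tools"]])
    call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
            "params": {"name": "get_order_status", "arguments": {"order_id": "A-17"}}}
    print(json.loads(handle(json.dumps(call)))["result"]["content"][0]["text"])
```

The client never needs compiled-in knowledge of `get_order_status`. It learns the name and input schema from the `tools/list` reply and passes both to the model. That is what lets one client work with many servers.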

3. Safety, Rate Limiting & Security in Agent-Tool Interactions

Standardization brings real leverage, but it also introduces new security risks. Building safe AI tools requires a vigilant approach to security engineering.

- Security risks of MCP: Exposing tools through an open protocol widens your attack surface. Without proper safeguards, you risk credential theft, unauthorized data access, and even malicious code execution. A prompt injection attack, such as instructions hidden in a retrieved document or web page, could "trick" an LLM into using an exposed tool in a way that leaks sensitive information or performs a harmful action.

- Mitigation strategies: Proactive security is non-negotiable. Here are some key best practices:

  - Authentication and Authorization: Implement strict access controls. An agent should only be able to use tools for which it has explicit permission, following the principle of least privilege.

  - Rate Limiting and Auditing: Log every tool call (which agent called which tool, with what arguments, and the outcome) so that anomalous behaviour can be detected and investigated. Apply rate limits so that a misbehaving or compromised agent cannot flood downstream systems.

  - Input Validation and WAFs: Validate every input the LLM passes to a tool to prevent injection attacks. A Web Application Firewall (WAF) in front of HTTP-facing tool servers can also help monitor and block suspicious requests.

  - Vulnerability Scanning: Regularly scan your MCP servers and tools for security vulnerabilities, and test them against adversarial inputs such as prompt-injection payloads. This proactive approach helps you identify and fix flaws before they can be exploited.
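Least privilege can start as a deny-by-default allowlist that is checked before any tool body runs. The Python sketch below assumes each agent carries an identity and that permissions are granted per tool. The `GRANTS` table, agent IDs, tool names, `authorize`, and `call_tool` are hypothetical. A production system would load grants from your identity or policy service rather than a literal dict.

```python
from typing import Any, Callable

# Hypothetical grants: which agent identity may call which tools. Anything absent is denied.
GRANTS: dict[str, frozenset[str]] = {
    "support-assistant": frozenset({"get_customer", "get_order_status"}),
    "scheduling-agent": frozenset({"schedule_meeting"}),
}


class ToolPermissionError(PermissionError):
    """Raised when an agent requests a tool it was never granted."""


def authorize(agent_id: str, tool_name: str) -> None:
    """Deny by default: unknown agents and ungranted tools are both rejected."""
    allowed = GRANTS.get(agent_id, frozenset())
    if tool_name not in allowed:
        raise ToolPermissionError(f"{agent_id!r} is not permitted to call {tool_name!r}")


def call_tool(agent_id: str, tool_name: str, fn: Callable[..., Any], **kwargs: Any) -> Any:
    """Check permission first; the tool body never runs for an unauthorized caller."""
    authorize(agent_id, tool_name)
    return fn(**kwargs)
```

Putting the check in a single choke point like `call_tool` means a prompt-injected request for an ungranted tool fails before any side effect occurs, regardless of what the model was persuaded to ask for.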
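Rate limiting and auditing can share a single gate. The Python sketch below uses a token bucket, one common rate-limiting technique. The bucket allows `rate` calls per second on average with bursts up to `capacity`, and the gate appends a JSON audit record for every attempt, whether allowed or throttled. `TokenBucket`, `guarded_call`, and the in-memory `AUDIT_LOG` list are hypothetical stand-ins. A real deployment would write to an append-only log sink and keep one bucket per agent or per tool. The clock is injectable so the behaviour can be tested deterministically.

```python
import json
import time
from typing import Callable


class TokenBucket:
    """Allows `rate` calls per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


AUDIT_LOG: list[str] = []  # hypothetical stand-in for an append-only log sink


def guarded_call(bucket: TokenBucket, agent_id: str, tool: str, args: dict) -> bool:
    """Record every attempt, allowed or throttled, then report whether it may proceed."""
    allowed = bucket.allow()
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(), "agent": agent_id, "tool": tool,
        "args": args, "decision": "allow" if allowed else "throttle",
    }))
    return allowed
```

Logging throttled attempts as well as allowed ones matters: a burst of `throttle` records is often the earliest visible sign of a looping or compromised agent. If tool arguments can contain personal data, redact them before they reach the log.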
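Input validation works best as an allowlist: declare exactly which fields a tool accepts and what each may look like, then reject everything else before the tool executes. The Python sketch below hand-rolls a tiny checker with the standard library so it stays self-contained. The `FIELD_RULES` table, the `REQUIRED` set, and the customer-ID format are hypothetical. In practice you might validate against the tool's declared JSON Schema with a dedicated library instead.

```python
import re

# Hypothetical per-field rules for a customer-lookup tool: (type, max length, pattern).
FIELD_RULES = {
    "customer_id": (str, 16, re.compile(r"C-\d{4}")),
    "include_history": (bool, None, None),
}
REQUIRED = {"customer_id"}


def validate_args(args: object) -> list[str]:
    """Return a list of problems; an empty list means the arguments may reach the tool."""
    if not isinstance(args, dict):
        return ["arguments must be a JSON object"]
    problems = [f"missing field: {name}" for name in sorted(REQUIRED - args.keys())]
    for name, value in args.items():
        rule = FIELD_RULES.get(name)
        if rule is None:
            problems.append(f"unexpected field: {name}")  # deny anything undeclared
            continue
        expected_type, max_len, pattern = rule
        if not isinstance(value, expected_type):
            problems.append(f"{name}: expected {expected_type.__name__}")
        elif max_len is not None and len(value) > max_len:
            problems.append(f"{name}: longer than {max_len} characters")
        elif pattern is not None and not pattern.fullmatch(value):
            problems.append(f"{name}: does not match required format")
    return problems
```

Using `fullmatch` rather than `search` means a valid ID followed by smuggled text is still rejected. Rejecting unexpected fields prevents the model from passing parameters the tool author never intended to expose.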

4. Conclusion

Building AI tools that are both smart and safe demands a thoughtful engineering approach, one that focuses on precision, modular design, protocol standardization, and an unwavering commitment to security. By mastering these technical foundations, your team can move beyond fragile prototypes and build robust, reliable AI tools that power productivity, safeguard data integrity, and propel your business into the age of intelligent automation.
